Kernel Memory Ordering

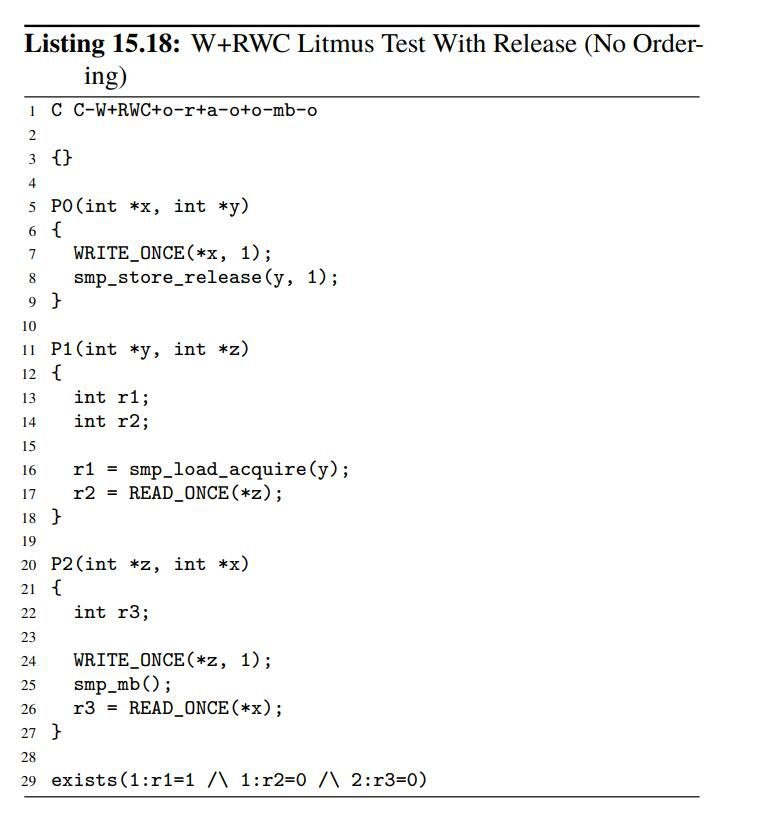

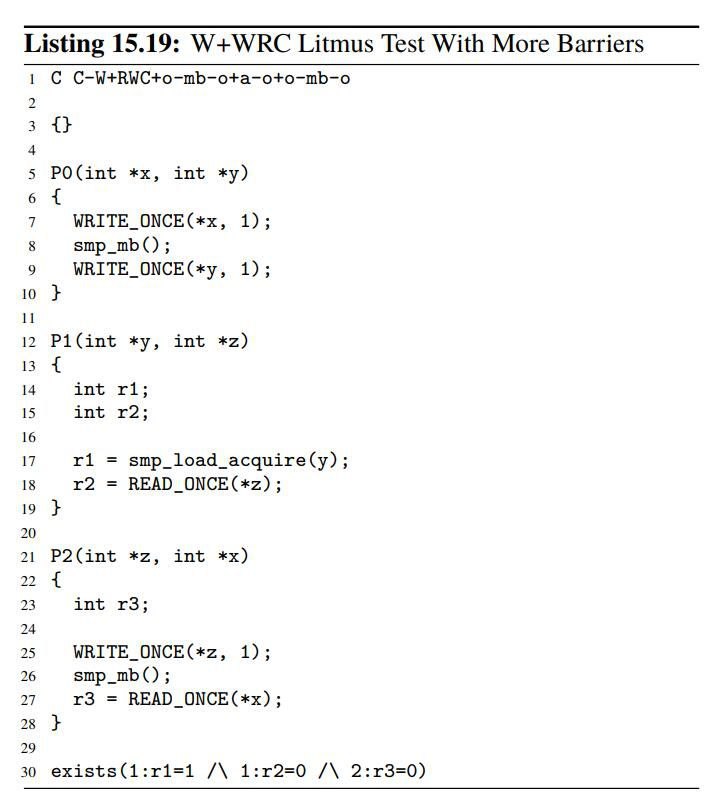

事情的起因是我无意间看到一个问题,下图中 15-18 的代码会触发存在 exists 所列出的情况,即同时存在 r1=1,r2=0,r3=0 的情况,而 15-19 的代码则不会,这两者唯一的区别就是 15-19 用 smp_mb() 和 WRITE_ONCE() 代替了 smp_store_release(),我找了不少资料,总算是能有一个勉强合理的解释,但因为对 CPU 实际的设计和实现并不了解,因此只把我的解释记录在这里。

Memory Ordering Model

CPU 对内存的操作可以分为两种:load 和 store。现代 CPU 为了优化性能,在硬件层面实现了各种黑魔法,比如多发射、乱序执行等等,这些优化使得程序执行的结果并非总是像代码那么直观。例如,在以下代码中,程序员总是预期观察到 a = 1 先于 r = b 发生,但实际结果却可能相反,r = b 先于 a = 1 发生。

1 | |

上述例子中,即便 load 先于 store 发生,看起来也不会带来什么危害,但在多处理器(SMP)的条件下,其危害就可能显现出来。

1 | |

在上述代码中,两个 load 操作都在相应的 store 操作之后,因此我们预期 r1 == 0 且 r2 == 0 这种情况不会发生,但由于 load 操作可能先于 store 发生,硬件在实际执行时可能是如下的顺序:

1 | |

在这种情况下,r1 == 0 且 r2 == 0 完全可能发生,如果软件工程师在写代码时没有预期到这种情况,则很可能导致代码最后运行出问题,并且一旦出现问题,是很难排查的。这就是硬件层面的优化带来的副作用,但是为了性能,我们只能苦一苦软件工程师,好在硬件工程师也考虑到了这种情况,为软件工程师提供了一些工具来解决这个问题。

为了描述和处理这种副作用,硬件工程师引入了一个术语 Memory Ordering。Memory Ordering 是从程序的视角看到的内存操作顺序,是硬件执行的结果。常见的内存序有以下几种:

- Strong Memory Ordering: 又叫 Program Ordering,也就是我们在代码定义中观察到的顺序,处理器中的读和写操作总是按照程序中的先后顺序发生,Intel 386 系列处理器使用这种内存序。

- Intel-64 Memory Ordering: Intel x86 为了提高性能而实现的内存模型,在大部分情况下和 Strong Memory Ordering 一致,但存在例外,因此相比于 Strong Memory Ordering 要弱,仅适用于 write-back cacheable 的内存。

- Weak Memroy Ordering:ARM v8-A 使用的内存模型,相比于 Intel-64 Memory Ordrering 更弱,同样也只适用于 write-back cachable 的内存。

对于软件工程师来说,最理想的显然是 Strong Memory Ordering,在这个基础上开发软件没有任何 Memory Ordering 方面的心智负担,但在性能和功耗的双重诱惑下,实际的硬件极少采用这种内存序。不同的硬件实现直接影响了 Memory Ordering 的表现,但对于软件工程师来说,他们并不想关心底层的硬件是如何实现 Memory Ordering 的,他们只关心如何解决 Memory Ordering 带来的副作用,因而定义了 5 种 primitive,软件可以通过合理使用这 4 种 Memory Barrier Primitive 来消除 Memory Ordering 带来的不确定性。Memory barrier primitive 可以分为以下 5 种 [https://elixir.bootlin.com/linux/v6.3/source/tools/memory-model/Documentation/explanation.txt]:

1 | |

这 4 种 primitive 看起来十分简单,到这里我们似乎已经可以回过头去回答最初的那个问题了,但答案好像并不是那么显然。从语义上来看,把 P0 中的 smp_store_release() 变成 smp_mb(); WRITE_ONCE(); 好像并没有什么意义,因为在此之前只有一个 store 操作,这个 store 操作既无法越过 smp_store_release() 也无法越过 smp_mb(),store 操作看起来总是有序的。

Observer and Muticopy Atomicity

为了解决这个问题,我们还需要回答另外一个问题:在一个 Processor 上看到的内存操作顺序一定和另一个 Processor 一致吗?答案是否定的,在这里我们必须引入一个新的概念,Observer。Observer 是内存的观察者,可以是 CPU 也可以是 DMA 控制器等,为了简化问题,我们后续只讨论所有 observer 都是 CPU(含义同 Processor,这里不做严格区分)的情况。

为什么不同的 Observer 可能看到不同的内存操作顺序呢?以 Intel CPU 为例,内存的写操作可能会暂存在 store buffer 中,而 store buffer 只对本 Processor 可见,对其他 Processor 是不可见的,这就导致在当前 Processor 看来某个地址的内存已经被更新,但在其他 Processor 上却还看不到这个更新的情况。Store buffer 并不是导致不同 observer 观察到内存更新顺序不一致的唯一原因,cache 硬件实现等也可能导致这类问题。

这里我们需要提到一个概念 Muticopy Atomicity [https://www.kernel.org/doc/Documentation/memory-barriers.txt]。Muticopy Atomicity 规定了当一个 store 操作对某个 CPU 可见时,必须同时对其他所有 CPU 可见,也就是一个 store 操作总是在某个时刻同时被所有 CPU 观察到。但现代 CPU 往往无法提供完全的 Muticopy Atomicity,而是不提供或者退而求其次实现了 Other Muticopy Atomicity,即一个 store 操作总是在某个时刻同时被所有其他 CPU 观察到。这里的其他 CPU 指的是除了执行该 store 操作的 CPU 以外的 CPU。但 Linux 内核并不要求硬件提供 Muticopy Atomicity,也就是我们并不能依赖 Multicopy Atomicity 去解决问题。但这并不意味着我们完全不能通过其他手段达到类似于 Muticopy Atomicity 的效果,generic memory barrier(smp_mb())可以保证在 barrier 之前的内存操作总是在执行 barrier 后的操作之前对所有 CPU 可见。也就是说,当某个 observer 观察到 generic memory barrier 之后的内存操作结果时,其他 CPU 一定已经能观察到 generic memory barrier 之前的内存操作的结果 [https://community.arm.com/arm-community-blogs/b/architectures-and-processors-blog/posts/memory-access-ordering-part-2---barriers-and-the-linux-kernel]。

到这里,我们就可以解释为什么使用 smp_mb() 的那个图不会发生了。当 P1 观察到 y == 1 时,由于 smp_mb() 的存在,所有的 processor 都一定能观察到 smp_mb() 之前的操作,即 x == 1。当 P1 又观察到 z == 0 时,意味着此时第 26 行 smp_mb() 还未执行完毕,否则 P1 一定能观察到 z == 1,这意味着 P2 在执行第 27 行时一定已经能观察到 x == 1,因此第 27 行的结果一定是 r3 == 1,不会发生 r3 == 0 的情况。

Release-Acquire ordering

虽然我们解决了使用 smp_mb() 的问题,但我们依然无法解决使用 smp_store_release() 的问题。但我们也可以发现,上述对于 primitive 的语义的说明似乎过于简单了,这些 primitive 似乎还会带来一些对于 observer 的副作用。这里我们回过头去找 C++ 对于 aquire 和 release 语义的定义 [https://en.cppreference.com/w/cpp/atomic/memory_order#Release-Acquire_ordering]:

1 | |

从 C++ 的定义来看,当存在多个 observer 时,release 操作需要与 acquire 操作同时使用,并且必须同时作用于同一个变量上,这样才能对执行 release 操作和 acquire 操作的两个 observer 进行同步,使他们对 release 和 acquire 操作前后的 order 达成一致,但 release-acquire 操作并不保证其他 observer 观察到相同的 order。到此,我们可以回过头再看使用 smp_store_release() 的那张图。

当我们观察到 r1 == 1 时,我们通过 release-acquire 的语义可知,P1 一定能观察到 x == 1,并且第 17 行 r2 = READ_ONCE(*z) 一定发生在观察到 x == 1 之后。同时,P1 观察到 z == 0,这时我们可以确定第 25 行 smp_mb() 还没有执行完成,否则其他 processor 一定能观察到 z == 1。但我们无法保证 P2 已经可以观察到 P0 的执行结果,P2 可能既没有观察到 x == 1 也没有观察到 y == 1,因此此时仍然可能发生 r3 == 0。

事实上,使用 smp_store_release() 的测试与该测试一致,仅变量命名不同。该测试的解释与我们之前的猜想一致,即 P2 并不一定能观察到 P0 的结果,Release-Acquire 并不能作为一个 global 的 ordering。

到此,我们解决了最初的问题,但此时我们仍然还有疑问,从 C++ 的定义来看,这些 primitive 还与架构有关,硬件究竟是如何实现这些 primitive 的呢?为了解决这个问题,我们直接编译相应的代码,并从汇编层面找到对应的硬件指令。

我们在内核里定义以下函数,并将其编译。

1 | |

Intel-64 Memory Ordering

在 Intel X86_64 上,编译的结果如下:

1 | |

通过汇编代码我们可以发现,Intel x86 的 store-release 和 load-aquire 与普通的 store 和 load 指令没有任何区别,smp_rmb() 和 smp_wmb() 为空指令,而 smp_mb() 则通过一条无意义的 lock 指令实现。Intel 不同代的 CPU 可能使用不同的 memory ordering,我们直接来看更先进的 CPU 使用的 Intel-64 Memory Ordering。Intel 在 SDM 里规定了 memory ordering 必须满足如下准则(fast-string operation 除外):

- Neither Loads Nor Stores Are Reordered with Like Operations

1

The Intel-64 memory-ordering model allows neither loads nor stores to be reordered with the same kind of operation. That is, it ensures that loads are seen in program order and that stores are seen in program order. - Stores Are Not Reordered With Earlier Loads

1

2The Intel-64 memory-ordering model ensures that a store by a processor may not occur before a previous load by

the same processor. - Loads May Be Reordered with Earlier Stores to Different Locations

1

2The Intel-64 memory-ordering model allows a load to be reordered with an earlier store to a different location.

However, loads are not reordered with stores to the same location. - Intra-Processor Forwarding Is Allowed

1

The memory-ordering model allows concurrent stores by two processors to be seen in different orders by those two processors; specifically, each processor may perceive its own store occurring before that of the other. - Stores Are Transitively Visible

1

The memory-ordering model ensures transitive visibility of stores; stores that are causally related appear to all processors to occur in an order consistent with the causality relation. - Stores Are Seen in a Consistent Order by Other Processors

1

the memory-ordering model allows stores by two processors to be seen in different orders by those two processors. However, any two stores must appear to execute in the same order to all processors other than those performing the stores. - Locked Instructions Have a Total Order

1

The memory-ordering model ensures that all processors agree on a single execution order of all locked instructions, including those that are larger than 8 bytes or are not naturally aligned. - Loads and Stores Are Not Reordered with Locked Instructions结合第 1 点和第 2 点和第 5 点,我们可以发现 store 操作之前所有的 store 和 load 操作都不会与该 store 重排,并且可以通过因果关系传递,这比

1

The memory-ordering model prevents loads and stores from being reordered with locked instructions that execute earlier or later.store-release的功能更强,同样 load 操作比 load-acquire 的功能更强。因此,在该内存模型下,所有除 fast-string 操作以外的 store 操作都是 store-release 操作,所有 load 操作都是 load-acquire 操作 [https://lwn.net/Articles/847481/],这也是为什么编译的结果中两者没有区别的原因。

对于 ARM 和 RISC-V,其结果和 x86 未必相同,先记个 TODO 在这里,等有时间了再去做。

Reference

- http://blog.chinaunix.net/uid-24683784-id-4394371.html

- https://www.open-std.org/jtc1/sc22/wg21/docs/papers/2016/p0124r1.html

- https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/tree/tools/memory-model/Documentation/explanation.txt?h=v5.11&id=f40ddce88593482919761f74910f42f4b84c004b#n682

- https://lwn.net/Articles/844224/

- https://lwn.net/Articles/846700/

- https://github.com/paulmckrcu/litmus/blob/master/auto/C-LB-GWR%2BR-A.litmus

- https://diy.inria.fr/linux/Power8-LB-GWR+R-A.html